
The main points in stochastic hydrology covered here are: method of maximum likelihood, likelihood function, estimates of parameters, Chebyshev inequality (irrespective of probability distribution), moments and expectation, covariance, and degree of association.


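Likelihood function:

$$L = f(x_1;\theta_1,\theta_2,\ldots,\theta_m)\times f(x_2;\theta_1,\theta_2,\ldots,\theta_m)\times\cdots\times f(x_n;\theta_1,\theta_2,\ldots,\theta_m) = \prod_{i=1}^{n} f(x_i;\theta_1,\theta_2,\ldots,\theta_m)$$

where θ1, θ2, …, θm are the parameters to be estimated. The maximum likelihood estimates are the values of the parameters that maximize L, obtained by solving

$$\frac{\partial L}{\partial \theta_i} = 0, \qquad i = 1, 2, \ldots, m$$

Because ln L is a monotonic function of L, the equivalent conditions ∂ ln L/∂θi = 0 are usually easier to work with.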
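As a numerical illustration of the method, the sketch below assumes an exponential pdf f(x; λ) = λe^(−λx) (an assumption chosen for illustration, not a distribution from these notes) and a small made-up sample. It maximizes ln L in two ways: in closed form, from ∂ ln L/∂λ = n/λ − Σxi = 0, and by a brute-force grid search. The two results should agree to within the grid spacing.

```python
import math


def log_likelihood(lam, xs):
    """ln L = sum of ln f(x_i; lam) for the assumed pdf f(x; lam) = lam * exp(-lam * x)."""
    return sum(math.log(lam) - lam * x for x in xs)


def mle_closed_form(xs):
    """Solves d(ln L)/d(lam) = n/lam - sum(x_i) = 0, giving lam_hat = n / sum(x_i)."""
    return len(xs) / sum(xs)


def mle_grid_search(xs, lo=0.01, hi=5.0, steps=5000):
    """Cross-check: evaluate ln L on a grid of lam values and keep the maximizer."""
    grid = (lo + (hi - lo) * k / steps for k in range(steps + 1))
    return max(grid, key=lambda lam: log_likelihood(lam, xs))


sample = [0.8, 1.9, 0.3, 2.4, 1.1, 0.6]  # illustrative values, not data from the notes
print(mle_closed_form(sample))   # about 0.845
print(mle_grid_search(sample))   # agrees with the closed form to within the grid spacing
```

Taking logarithms turns the product of pdf values into a sum. This is numerically safer and is the reason the derivative is normally taken of ln L rather than L.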

Obtain the maximum likelihood estimate of the parameter β in the pdf f(x; β). [The expression for the pdf and the worked derivation are not recoverable from the extracted text.]

The solution follows the procedure above: form the likelihood L = ∏ f(xi; β) over i = 1, …, n, take ln L, set ∂ ln L/∂β = 0, and solve the resulting equation for the estimate β̂.

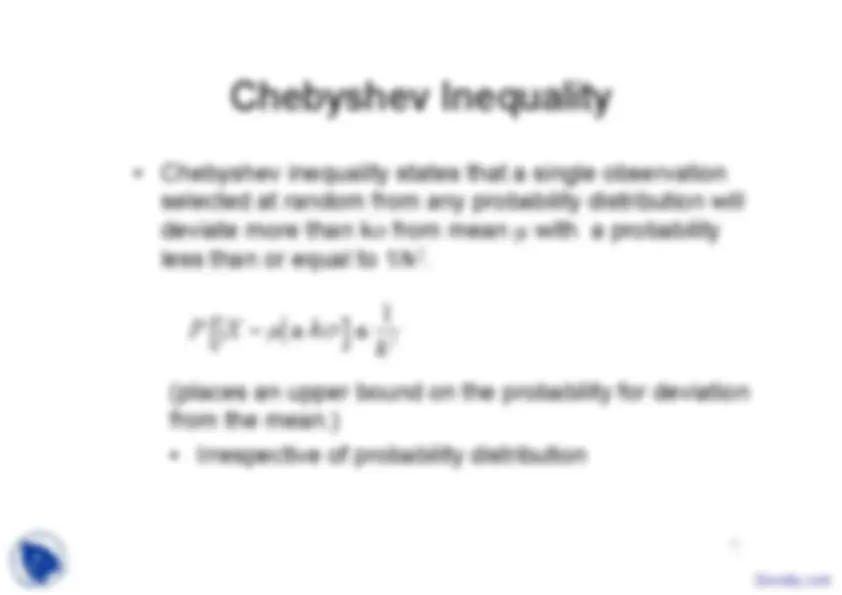

Chebyshev inequality: a value selected at random from any probability distribution will deviate more than kσ from the mean μ with a probability less than or equal to 1/k². The inequality places an upper bound on the probability of a deviation from the mean.

$$P\left[\,|X-\mu| \ge k\sigma\,\right] \le \frac{1}{k^2}$$
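The bound holds irrespective of the form of the distribution. The quick empirical check below assumes exponential draws, a skewed distribution picked only for illustration. It uses the mean and standard deviation of the simulated draws as stand-ins for μ and σ, and compares the observed tail frequency with 1/k².

```python
import math
import random


def chebyshev_bound(k):
    """Upper bound on P(|X - mu| >= k*sigma) given by the Chebyshev inequality."""
    return 1.0 / k ** 2


def empirical_tail(samples, k):
    """Fraction of samples lying at least k standard deviations from the mean.

    The mean and standard deviation of the samples stand in for mu and sigma.
    """
    n = len(samples)
    mu = sum(samples) / n
    sigma = math.sqrt(sum((x - mu) ** 2 for x in samples) / n)
    return sum(abs(x - mu) >= k * sigma for x in samples) / n


random.seed(1)
# Exponential draws (skewed, clearly non-normal), chosen only for illustration
draws = [random.expovariate(1.0) for _ in range(50_000)]

for k in (1.5, 2.0, 3.0):
    print(f"k={k}: observed {empirical_tail(draws, k):.4f}, bound {chebyshev_bound(k):.4f}")
```

The observed frequencies are far below the bound. The bound is loose because it must hold for every distribution.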

The mean annual stream flow of a river is 135 Mm³ and the standard deviation is 23.8 Mm³. What is the maximum probability that the flow in a year will deviate more than 45 Mm³ from the mean?

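Applying the Chebyshev inequality,

kσ = 45

k × 23.8 = 45

k = 1.89

$$P\left[\,|X-\mu| \ge k\sigma\,\right] \le \frac{1}{k^2} = \frac{1}{1.89^2} \approx 0.28$$

The maximum probability that the annual flow deviates from the mean by more than 45 Mm³ is therefore about 0.28.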
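The arithmetic of this example can be reproduced in a few lines. Only σ and the allowed deviation enter the bound. The mean is kept in the code for reference only.

```python
mean_flow = 135.0   # Mm^3; not needed for the bound itself
sd_flow = 23.8      # Mm^3
deviation = 45.0    # Mm^3, allowed departure from the mean

k = deviation / sd_flow          # k * sigma = 45
max_prob = 1.0 / k ** 2          # Chebyshev upper bound
print(round(k, 2), round(max_prob, 2))  # 1.89 0.28
```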

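The covariance of two random variables X and Y is denoted σX,Y or Cov(X, Y):

$$\sigma_{X,Y} = E\left[(X-\mu_x)(Y-\mu_y)\right] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty}(x-\mu_x)(y-\mu_y)\,f(x,y)\,dx\,dy$$

σX,Y = Cov(X, Y) = 0 if X and Y are independent.

Sample estimate of the covariance:

$$s_{X,Y} = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{n-1}$$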
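The sample covariance formula translates directly into code. The function name and the small data set below are illustrative only.

```python
def sample_covariance(xs, ys):
    """s_XY = sum((x_i - xbar) * (y_i - ybar)) / (n - 1)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    return sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys)) / (n - 1)


x = [2.0, 4.0, 6.0, 8.0]   # illustrative values
y = [1.0, 3.0, 2.0, 5.0]
print(sample_covariance(x, y))  # 11/3, about 3.667
```

The n − 1 divisor matches the sample estimate above. Dividing by n instead gives the (biased) moment estimate.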

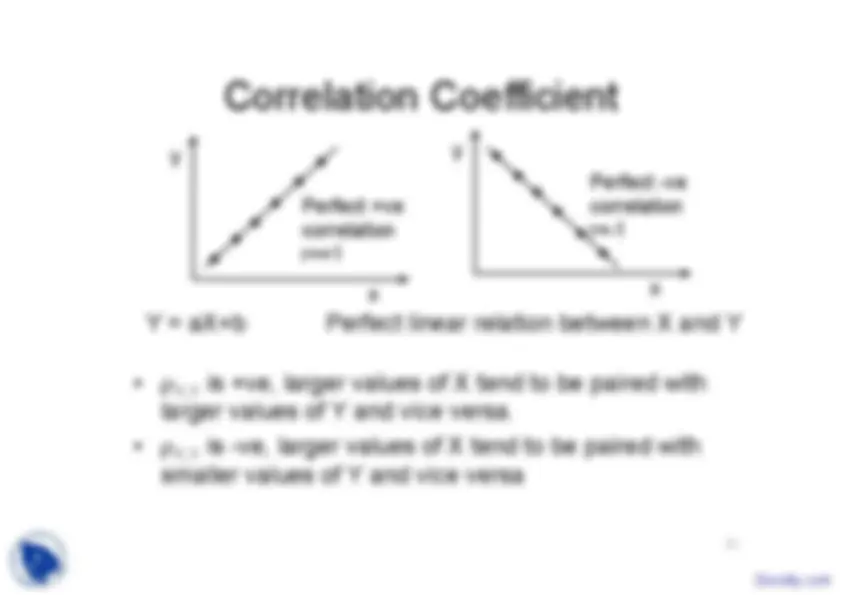

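Correlation coefficient: a measure of the degree of linear association between two rvs X and Y.

$$\rho_{X,Y} = \frac{\sigma_{X,Y}}{\sigma_X\,\sigma_Y}$$

ρX,Y = 0 if X and Y are independent.

Sample estimate:

$$r_{X,Y} = \frac{s_{X,Y}}{s_X\,s_Y}$$

$$-1 \le \rho_{X,Y} \le 1$$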
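A minimal sketch of the sample correlation coefficient follows. The data are illustrative. The second case shows that the converse of the independence statement does not hold. With y = x² on a range symmetric about zero, y is completely determined by x, yet r = 0, because r measures only linear dependence.

```python
import math


def sample_correlation(xs, ys):
    """r_XY = s_XY / (s_X * s_Y), each computed with the n - 1 divisor."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    s_xy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys)) / (n - 1)
    s_x = math.sqrt(sum((x - xbar) ** 2 for x in xs) / (n - 1))
    s_y = math.sqrt(sum((y - ybar) ** 2 for y in ys) / (n - 1))
    return s_xy / (s_x * s_y)


# Positively associated sample: r is positive and below 1
print(sample_correlation([2.0, 4.0, 6.0, 8.0], [1.0, 3.0, 2.0, 5.0]))

# y = x**2 on a symmetric range: exact (nonlinear) dependence, yet r = 0
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
print(sample_correlation(xs, [x ** 2 for x in xs]))
```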

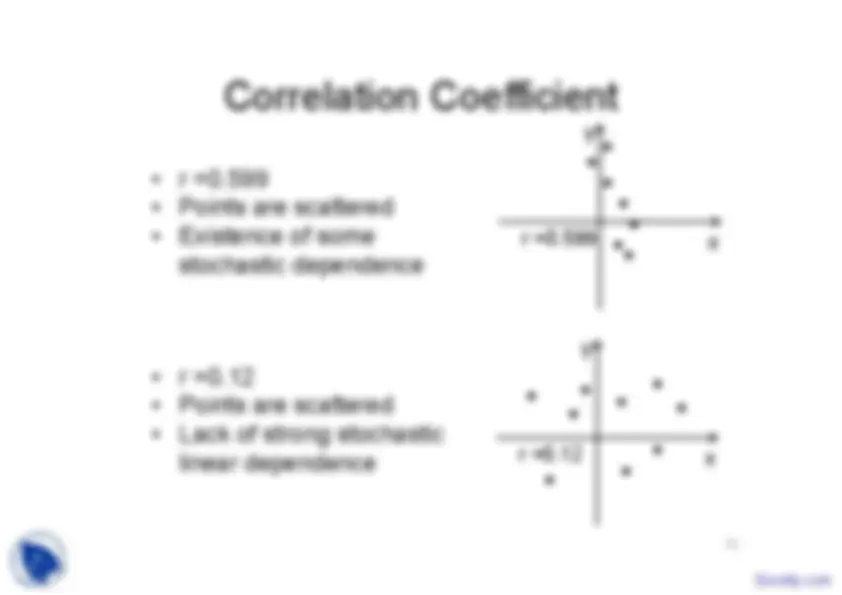

[Figure: scatter plots of y against x illustrating stochastic dependence; linear dependence; nonlinear dependence, for which a high correlation coefficient can still result; and variables that are functionally related.]

Consider Y = aX + b, a perfect linear relation. Substitute Y = aX + b into

$$\rho_{X,Y} = \frac{\sigma_{X,Y}}{\sigma_X\,\sigma_Y} = \frac{E[XY]-E[X]\,E[Y]}{\sigma_X\,\sigma_Y}$$

which gives

$$\rho_{X,Y} = \frac{E\left[aX^2+bX\right]-E[X]\,E[aX+b]}{\sigma_X\,\sigma_Y} = \frac{aE[X^2]+bE[X]-a\left(E[X]\right)^2-bE[X]}{\sigma_X\,\sigma_Y} = \frac{a\left(E[X^2]-\left(E[X]\right)^2\right)}{\sigma_X\,\sigma_Y} = \frac{a\,\sigma_X^2}{\sigma_X\,\sigma_Y}$$

Since σY² = a²σX², σY = |a|σX, and therefore

$$\rho_{X,Y} = \frac{a\,\sigma_X^2}{|a|\,\sigma_X^2} = \frac{a}{|a|} = \pm 1$$

|ρ| = 1 if there is a perfect linear relationship between X and Y: ρ = +1 when a > 0 and ρ = −1 when a < 0. The correlation coefficient is a measure of linear dependence.
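The result ρ = a/|a| can be confirmed numerically. The sketch below uses arbitrary illustrative x values and two assumed pairs (a, b), one with a positive slope and one with a negative slope. The common n − 1 divisors cancel in the ratio, so the function works with plain sums of squares and cross-products.

```python
import math


def pearson_r(xs, ys):
    """Correlation coefficient; the n - 1 divisors cancel, so raw sums are used."""
    n = len(xs)
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    syy = sum((y - ybar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


xs = [1.0, 2.5, 3.0, 4.5, 7.0]  # arbitrary illustrative values
for a, b in [(2.0, 1.0), (-0.5, 3.0)]:
    ys = [a * x + b for x in xs]
    # The derivation predicts a/|a|: +1 for a > 0, -1 for a < 0 (up to rounding)
    print(a, b, pearson_r(xs, ys))
```

The intercept b plays no role in the result. It shifts Y without changing how Y co-varies with X.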

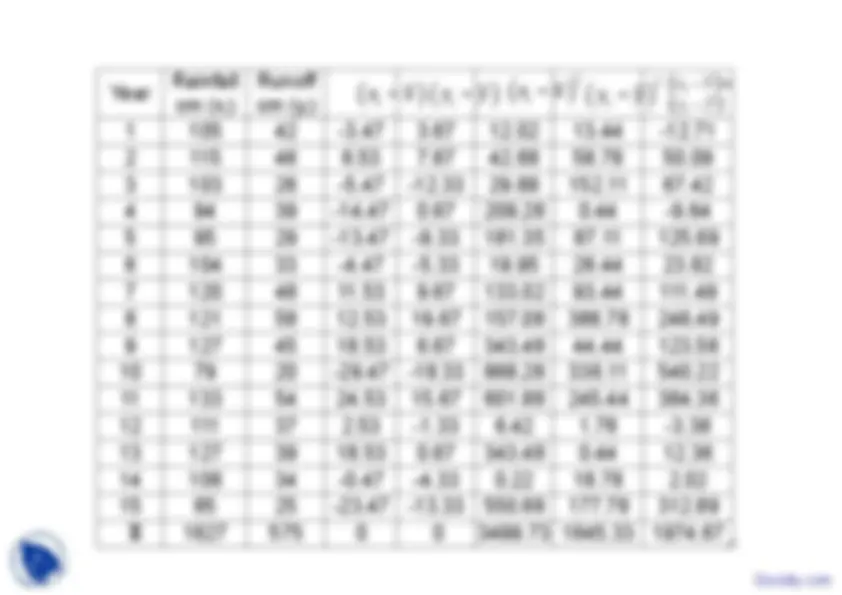

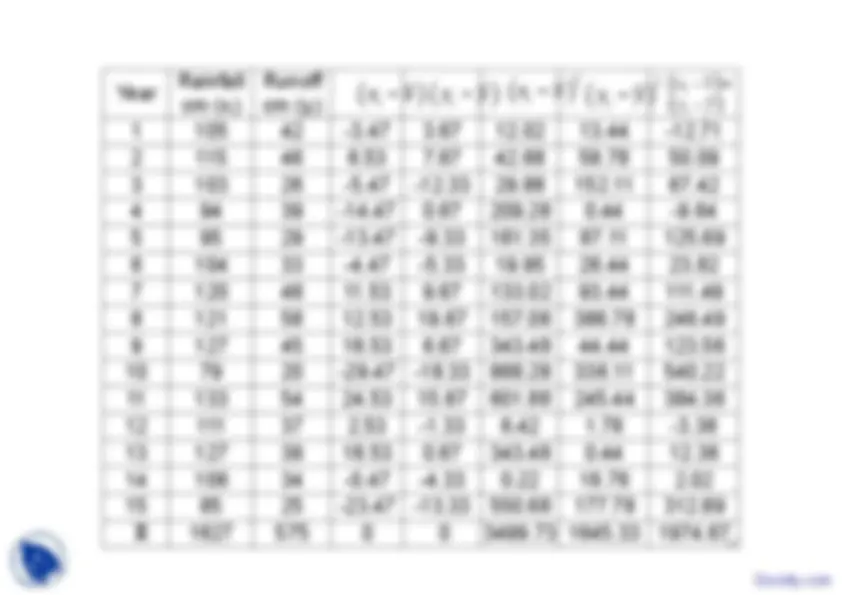

Example-3 (contd.)

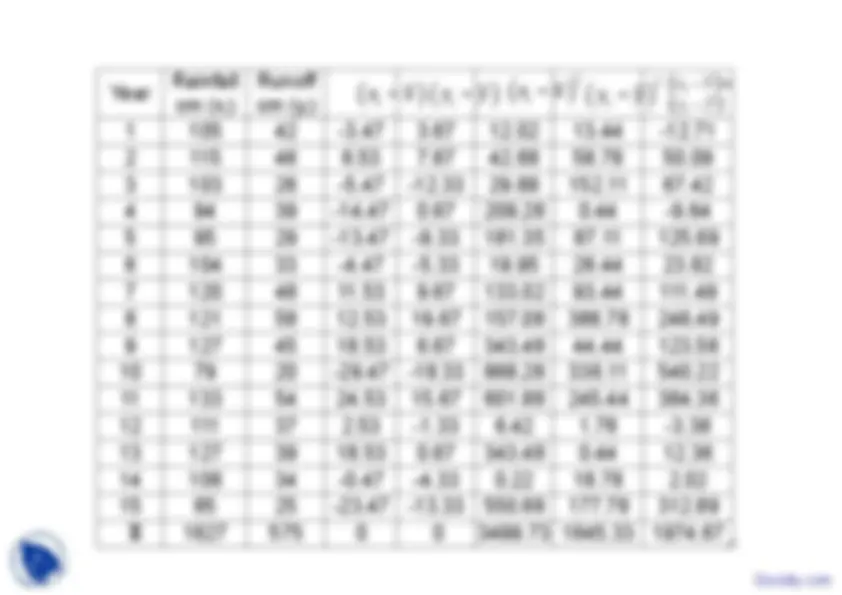

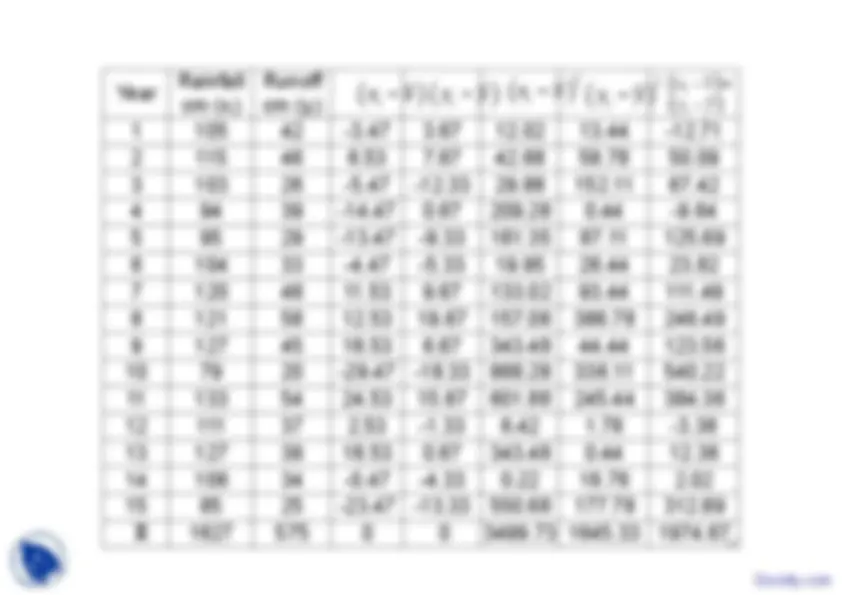

Mean,

$$\bar{x} = \frac{\sum_{i=1}^{n} x_i}{n}$$

Therefore mean, x̄ = 1627/15 = 108.5 cm

Variance,

$$s_x^2 = \frac{\sum_{i=1}^{n}(x_i-\bar{x})^2}{n-1} = \frac{\sum_{i=1}^{15}(x_i-\bar{x})^2}{15-1} = 250\ \text{cm}^2$$

Standard deviation, sx = 15.811 cm

Mean,

$$\bar{y} = \frac{\sum_{i=1}^{n} y_i}{n}$$

Therefore mean, ȳ = 575/15 = 38.33 cm

Variance,

$$s_y^2 = \frac{\sum_{i=1}^{n}(y_i-\bar{y})^2}{n-1} = \frac{\sum_{i=1}^{15}(y_i-\bar{y})^2}{15-1}$$

Standard deviation, sy = 10.841 cm
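The summary statistics of Example-3 can be checked from the totals quoted above. The raw observations are not in the extracted text. The sketch therefore uses only the reported sums, the sample size n = 15 implied by the n − 1 = 15 − 1 divisors, and the reported variance of x.

```python
import math

n = 15                          # implied by the (15 - 1) divisors
sum_x, sum_y = 1627.0, 575.0    # totals quoted in Example-3 (cm)
var_x = 250.0                   # sample variance of x quoted in Example-3 (cm^2)

mean_x = sum_x / n
mean_y = sum_y / n
s_x = math.sqrt(var_x)          # standard deviation is the square root of the variance

print(f"{mean_x:.1f} {mean_y:.2f} {s_x:.3f}")  # 108.5 38.33 15.811
```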

Correlation coefficient,

$$s_{X,Y} = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{n-1} = \frac{\sum_{i=1}^{15}(x_i-\bar{x})(y_i-\bar{y})}{15-1}$$

$$r_{X,Y} = \frac{s_{X,Y}}{s_X\,s_Y} = \frac{s_{X,Y}}{15.811 \times 10.841}$$

(xi, yi) are the observed values, and ŷi is the predicted value of y at xi.

Best fit line: ŷi = a + b xi

Error: ei = yi − ŷi

Sum of square errors:

$$M = \sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2 = \sum_{i=1}^{n}\left\{y_i-\left(a+bx_i\right)\right\}^2$$

Estimate the parameters a and b such that the sum of square errors M is minimum.

[Figure: observed values yi and predicted values ŷi about the best fit line, y plotted against x.]
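Setting ∂M/∂a = 0 and ∂M/∂b = 0 gives the standard least-squares solution b = Σ(xi − x̄)(yi − ȳ)/Σ(xi − x̄)² and a = ȳ − b x̄. The minimal sketch below applies it to a small made-up data set, not data from the notes, and confirms that moving a or b away from the estimates increases M. The function names are illustrative.

```python
def fit_line(xs, ys):
    """Least-squares estimates (a, b) for y_hat = a + b*x (minimizes M)."""
    n = len(xs)
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    sxx = sum((x - xbar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are equal; the slope is undefined")
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = ybar - b * xbar
    return a, b


def sum_square_errors(a, b, xs, ys):
    """M = sum of (y_i - (a + b*x_i))**2."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


xs = [2.0, 4.0, 6.0, 8.0]   # illustrative data, not from the notes
ys = [1.0, 3.0, 2.0, 5.0]
a, b = fit_line(xs, ys)
m_min = sum_square_errors(a, b, xs, ys)
print(a, b, m_min)          # a close to 0, b = 0.55, M = 2.7 (up to rounding)

# Perturbed lines give a larger sum of square errors
for da, db in [(0.1, 0.0), (0.0, 0.05), (-0.2, 0.03)]:
    print(da, db, sum_square_errors(a + da, b + db, xs, ys) > m_min)
```

M is a convex quadratic in (a, b), so the stationary point found from the two partial derivatives is its unique minimum whenever the xi are not all equal.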