These lecture slides on Automated Planning cover domain-independent planners, the abstract search procedure, planning algorithms, the current set of solutions, unpromising members, loop detection, and constraint violation. Key points: heuristics in planning, classical planning, goal nodes, multiple paths, number of nodes, optimal solution, hill climbing, node-selection heuristics, solution for relaxation, and depth-first search.

Typology: Slides

Digression: the A* algorithm (on trees)

[Figure: search tree with the labels g(s), h*(s), and u]
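The slide names A* on trees and the quantities g(s) and h*(s) but its body did not survive extraction. As a reminder of the standard algorithm, here is a minimal sketch that expands nodes in order of f(s) = g(s) + h(s), where g(s) is the cost of the path from the root to s and h(s) is an estimate of h*(s), the true minimum cost from s to a goal. The function name `a_star_tree`, the callbacks, and the toy tree are hypothetical and are not taken from the slides.

```python
import heapq
from itertools import count


def a_star_tree(root, children, is_goal, h):
    """A* on a tree: always expand the frontier node with the lowest f = g + h.

    children(s) returns (child, edge_cost) pairs and h(s) estimates the
    remaining cost. On a tree each node has exactly one path from the root,
    so no visited set or duplicate check is needed.
    """
    tie = count()  # tie-breaker so the heap never has to compare nodes
    frontier = [(h(root), next(tie), 0, root, [root])]
    while frontier:
        _f, _, g, s, path = heapq.heappop(frontier)
        if is_goal(s):
            return path, g
        for child, cost in children(s):
            g_child = g + cost
            heapq.heappush(
                frontier,
                (g_child + h(child), next(tie), g_child, child, path + [child]),
            )
    return None  # no goal node in the tree


# Hypothetical toy tree (assumption, for illustration only).
TREE = {
    "a": [("b", 1), ("c", 4)],
    "b": [("d", 5), ("e", 2)],
    "c": [("f", 1)],
    "d": [], "e": [], "f": [],
}
GOALS = {"d", "f"}
# h never overestimates the true remaining cost h*, i.e. it is admissible.
H = {"a": 3, "b": 2, "c": 1, "d": 0, "e": 0, "f": 0}

result = a_star_tree("a", lambda s: TREE[s], lambda s: s in GOALS, lambda s: H[s])
print(result)  # (['a', 'c', 'f'], 5)
```

In this toy tree the search first follows the cheap edge to `b`, but the goal below `b` costs 6 in total, so the goal `f` reached through `c` with cost 5 is returned. Because `H` is admissible, the first goal popped from the frontier is an optimal one.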

2004 International Planning Competition

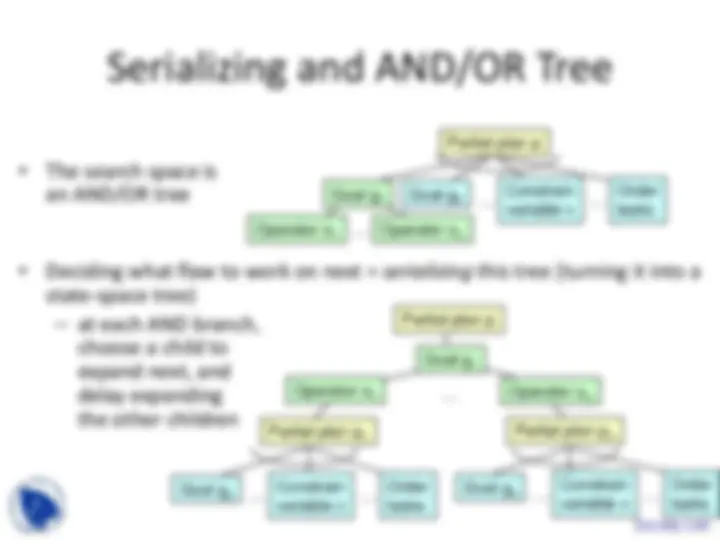

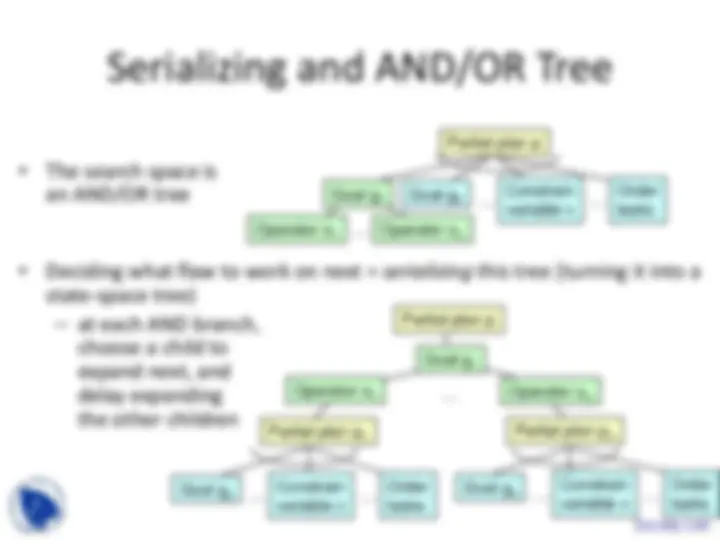

Plan-Space Planning

[Figure: tree rooted at partial plan p, with branches labeled Goal g1, Goal g2, Constrain variable v, and Order tasks; below Goal g1 are Operator o1 … Operator on]

[Figure: partial plan p, then Goal g1, then Operator o1 … Operator on leading to partial plans p1 … pn; each of these branches again into Goal g2 …, Constrain variable v …, and Order tasks]

One Serialization

Resolver Selection